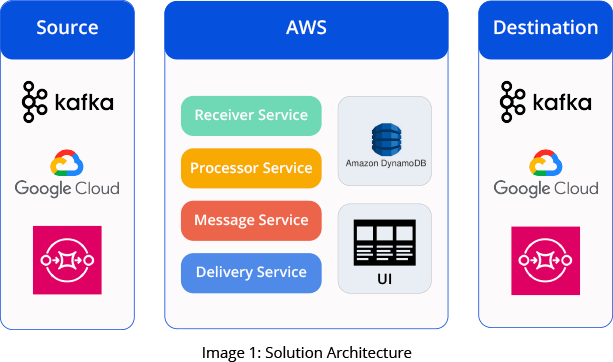

To meet the company’s needs, our Application Development and Quality Engineering teams jointly built a node integration framework. It connects to message sources such as Kafka, Google Cloud Platform (GCP) topics, and Amazon S3 buckets, and it can also expose HTTP endpoints. The framework consumes messages over these different protocols and then processes them.
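One way to consume several protocols and still process every message the same way is to put each protocol behind a common interface. The sketch below assumes that design. The `Source` base class, the `poll` method, the `SOURCE_TYPES` registry, and the in-memory adapters are all hypothetical. Real adapters would wrap Kafka, GCP, S3, and HTTP clients.

```python
"""Hypothetical sketch: protocol-agnostic sources behind one interface.

The in-memory adapters stand in for real Kafka/S3 clients so the example
runs on its own. All class and function names are illustrative assumptions.
"""
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, List, Type

Message = Dict[str, Any]


class Source(ABC):
    """Common interface that every protocol adapter implements."""

    def __init__(self, config: Dict[str, Any]) -> None:
        self.config = config

    @abstractmethod
    def poll(self) -> List[Message]:
        """Return the next batch of messages, normalized to plain dicts."""


class InMemoryKafkaSource(Source):
    def poll(self) -> List[Message]:
        # Stand-in: a real adapter would read records from a Kafka consumer.
        return [{"protocol": "kafka", "body": r} for r in self.config.get("records", [])]


class InMemoryS3Source(Source):
    def poll(self) -> List[Message]:
        # Stand-in: a real adapter would list and read objects from a bucket.
        return [{"protocol": "s3", "body": o} for o in self.config.get("objects", [])]


# Maps the "protocol" field of a (hypothetical) config item to an adapter class.
SOURCE_TYPES: Dict[str, Type[Source]] = {
    "kafka": InMemoryKafkaSource,
    "s3": InMemoryS3Source,
}


def build_sources(configs: List[Dict[str, Any]]) -> List[Source]:
    """Instantiate one adapter per config item."""
    return [SOURCE_TYPES[c["protocol"]](c) for c in configs]


def consume_all(sources: List[Source], handle: Callable[[Message], None]) -> int:
    """Drain every source into a single handler and return the message count."""
    count = 0
    for source in sources:
        for message in source.poll():
            handle(message)
            count += 1
    return count
```

Because downstream code only sees normalized `Message` dicts, adding a protocol in this design means registering one more adapter class. The processing logic does not change.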

We also implemented an automation testing framework. It supports consuming a single message and publishing it to several separate destinations. It also supports parallel transformations, meaning different changes applied to the same message, as well as data filtering. On startup, every service connects to Amazon DynamoDB, retrieves its configuration, establishes its connections, and begins consuming messages.
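The fan-out, parallel-transformation, and filtering behavior can be sketched as configuration-driven routing. This is a minimal illustration under stated assumptions. The route fields (`destination`, `transform`, `filter`), the named transforms, and the destination strings are all hypothetical. The `ROUTES` list stands in for configuration items that the services would load from DynamoDB at startup.

```python
"""Hypothetical sketch: one message fanned out to several routes.

Each route has its own filter and transform, and transforms run in a
thread pool. Route fields and destination names are assumptions; ROUTES
stands in for configuration loaded from DynamoDB at startup.
"""
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]

# Named transforms: config stores only the name, code holds the function.
TRANSFORMS: Dict[str, Callable[[Message], Message]] = {
    "identity": lambda m: dict(m),
    "uppercase_name": lambda m: {**m, "name": str(m.get("name", "")).upper()},
    "drop_email": lambda m: {k: v for k, v in m.items() if k != "email"},
}

ROUTES: List[Dict[str, Any]] = [
    {"destination": "kafka:orders-enriched", "transform": "uppercase_name", "filter": {"type": "order"}},
    {"destination": "gcp:orders-audit", "transform": "drop_email", "filter": {"type": "order"}},
    {"destination": "http:returns", "transform": "identity", "filter": {"type": "return"}},
]


def matches(message: Message, filter_spec: Dict[str, Any]) -> bool:
    """Data filter: every key in filter_spec must equal the message's value."""
    return all(message.get(k) == v for k, v in filter_spec.items())


def fan_out(message: Message, routes: List[Dict[str, Any]]) -> List[Tuple[str, Message]]:
    """Apply each matching route's transform to the same input message."""
    selected = [r for r in routes if matches(message, r.get("filter", {}))]

    def apply(route: Dict[str, Any]) -> Tuple[str, Message]:
        # Every transform returns a new dict, so routes never see each other's changes.
        return route["destination"], TRANSFORMS[route["transform"]](message)

    with ThreadPoolExecutor() as pool:
        # map() preserves route order even though transforms run concurrently.
        return list(pool.map(apply, selected))
```

For an order message, `fan_out` yields two independently transformed copies, one per matching route. A message that matches no filter yields an empty list and is therefore filtered out.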

Processing covers transforming the received data into a different payload, enriching it with additional information, and publishing it to a specified destination. The destination can be a Kafka topic, another HTTP endpoint, a GCP topic, or Amazon DynamoDB for secure cloud-based data storage.

Here are the key components of our solution:

- Source: The system currently supports four source protocols: Kafka, GCP, HTTP, and Amazon S3.

- Destination: The system supports four destination protocols: NSP, GCP, HTTP, and Amazon DynamoDB.

- Receiver Service: This component ingests messages from the Amazon S3 bucket and forwards them to Simple Queue Service (SQS) queues.

- Processor Service: This service reads messages from the SQS queues. Depending on the message type, it transforms each message, appends additional information, and publishes it to the outbound queue.

- Delivery Service: This service retrieves messages from the outbound queue and publishes them to multiple destinations.

- DynamoDB: Amazon DynamoDB stores all of the services' configurations.

- Status: This feature tracks the state of each message and makes it possible to retry failed payloads.
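The flow through these components can be sketched end to end. This is a simplified, in-memory illustration rather than the framework's implementation. Its stand-ins are all assumptions: `collections.deque` for the SQS and outbound queues, a plain dict keyed by S3 object key for the bucket, a dict for the status store, and plain callables for destination publishers. The status values and the per-type transform are hypothetical.

```python
"""Hypothetical in-memory sketch of Receiver -> Processor -> Delivery.

deque stands in for the SQS/outbound queues, a dict for the status store,
and callables for destination publishers. All names are illustrative.
"""
import json
from collections import deque
from typing import Any, Callable, Deque, Dict, List

Message = Dict[str, Any]

inbound: Deque[Message] = deque()   # stands in for the inbound SQS queue
outbound: Deque[Message] = deque()  # stands in for the outbound queue
status: Dict[str, str] = {}         # message id -> RECEIVED | PROCESSED | DELIVERED | FAILED

# Hypothetical per-type transform; unknown types are copied unchanged via dict().
TRANSFORMS_BY_TYPE: Dict[str, Callable[[Message], Message]] = {
    "order": lambda p: {"orderId": p["id"], "total": p["amount"]},
}


def receive(s3_objects: Dict[str, str]) -> None:
    """Receiver: parse each S3 object (key -> JSON body) and enqueue it."""
    for key, body in s3_objects.items():
        inbound.append({"id": key, "payload": json.loads(body)})
        status[key] = "RECEIVED"


def process(source: str) -> None:
    """Processor: transform by message type, enrich, and forward to outbound."""
    while inbound:
        msg = inbound.popleft()
        payload = msg["payload"]
        transform = TRANSFORMS_BY_TYPE.get(payload.get("type"), dict)
        enriched = {**transform(payload), "source": source}  # enrichment step
        outbound.append({"id": msg["id"], "payload": enriched})
        status[msg["id"]] = "PROCESSED"


def deliver(publishers: List[Callable[[Message], None]], max_attempts: int = 3) -> None:
    """Delivery: publish to every destination, retrying only the failed ones."""
    while outbound:
        msg = outbound.popleft()
        pending = list(publishers)
        for _ in range(max_attempts):
            failed = []
            for publish in pending:
                try:
                    publish(msg["payload"])
                except Exception:  # simplified: treat any error as retryable
                    failed.append(publish)
            pending = failed
            if not pending:
                break
        status[msg["id"]] = "FAILED" if pending else "DELIVERED"
```

Retrying only the destinations that failed avoids re-sending a payload to destinations that already accepted it. Messages still marked `FAILED` after `max_attempts` stay visible in the status store for a later retry.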