Integrate NiFi, Airflow & Snowflake for Better Data Pipelines

Tired of messy data flows? Learn how combining Apache NiFi, Airflow, and Snowflake delivers a scalable, accurate pipeline for seamless data movement and consolidation.

To remain competitive and meet business goals, organizations must continually re-examine their processes and identify areas for improvement. This ongoing examination helps companies find new opportunities to gain efficiency, particularly in their big data flows.

The Apache NiFi project integrates easily with Snowflake, helping businesses make crucial decisions faster, provide better customer service, and reduce costs through real-time insights. In this article, we discuss how data engineers can build efficient data pipelines using Apache NiFi’s integration with Snowflake and Airflow, an approach that helps reduce the potential for errors in big data processing.

What is Apache NiFi?

Apache NiFi is a real-time open-source data ingestion platform that manages data transfer between source and destination systems. Apache NiFi can extract, transform, and load data with processors in a GUI-based drag-and-drop interface.

Many flows can be built in Apache NiFi without writing any code, using only its existing processors. NiFi grew out of NiagaraFiles, a technology originally developed by the NSA and later donated to the Apache Software Foundation. At the time of writing, the latest version is 1.13, and the project maintains an active release schedule and a thriving developer community. NiFi can handle anything that is accessible via an HTTPS request.

It supports several protocols, including HTTPS, SFTP, and various messaging protocols; can retrieve and store files; ships with around 188 processors; and allows users to create custom plugins that support a wide variety of data systems. Table 1 summarizes Apache NiFi’s features and benefits.

| Feature | Benefit |
|---|---|
| Highly Configurable | Apache NiFi’s high configurability allows flows to be modified at runtime. This capability helps users achieve efficient throughput, low latency, and dynamic prioritization. |
| Web-based User Interface | Apache NiFi provides an easy-to-use web-based user interface for designing, controlling, and monitoring flows without needing other tools. |
| Built-in Monitoring | Apache NiFi provides a data provenance module to track and monitor data flows from beginning to end. Developers can also create custom processors and reporting tasks according to their needs. |
| Support for Secure Protocols | Apache NiFi supports SSL/TLS, HTTPS, SSH, and other encryption protocols. |
| Flexible | NiFi ships with a large library of processors and allows users to create custom plugins that support a vast number of data systems. |

What Do Airflow and Snowflake Bring to the Table?

While NiFi is great at ‘heavy lifting’ tasks, such as transforming and loading massive amounts of data, Apache Airflow excels at scheduling and monitoring tasks. Airflow lets you define data pipelines as workflows (DAGs) that it then runs on a schedule and monitors automatically.

Snowflake is a powerful hosted cloud platform that provides data warehouse and data lake capabilities. In addition, Snowflake includes analytics tools that facilitate collaboration, data engineering, security, and machine learning.

Combining these two tools with Apache NiFi lets you define automated processes that can move data back and forth between Snowflake and other systems. Once the data is funneled into Snowflake, it opens the scope for deep analysis. Further, Snowflake has connectors to business intelligence dashboards such as Tableau.

How Can an Airflow – NiFi – Snowflake Integration Benefit Your Business?

Airflow, NiFi, and Snowflake are each capable tools on their own. When you combine all three, a powerful business intelligence (BI) platform takes shape.

Some business benefits are immediately evident, including:

Proactive system performance monitoring: The integrated platform can flow operational data into Snowflake for analysis. If certain thresholds are not met, the system can send out alerts and notifications, allowing a proactive response to performance degradation.

Sales trend analysis based on date and time or geographic area: Snowflake can store historical data. The integrated system can continuously provide up-to-date sales information. You can use this information to analyze sales trends by date, time, geographic area, or other desired criteria. This allows your business to react quickly to the changing sales landscape.

Tighter control over inventory movement: Tracking inventory movement can be a nightmare. NiFi can be used to ingest inventory data from multiple sources. Airflow can trigger and monitor workflows that move the data into Snowflake. From Snowflake, you can deploy BI dashboards to give your company a clear picture of the exact status of your inventory at any given time.

Near real-time access to accurate data: NiFi can combine data from multiple connectors, consolidate it, and filter out duplicates (for example, with its DetectDuplicate processor) to improve accuracy. Because the integrated system scales well and responds quickly, the result is near real-time access to accurate data.

Easy automated movement of data between systems: You can use Airflow for scheduling and monitoring workflows while NiFi does ‘heavy lifting’ tasks like transforming and loading data. NiFi includes drag-and-drop processors that allow you to move data easily between Snowflake and other systems.
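The first benefit above, threshold-based alerting, comes down to comparing recent metrics against configured minimums. The sketch below assumes the metrics have already been queried from Snowflake (for example, by an Airflow task); the `Metric` class, the metric names, and the threshold values are hypothetical, and delivering the actual notification is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Hypothetical metric row, e.g. one result of a Snowflake monitoring query."""
    name: str
    value: float


# Hypothetical minimum acceptable value for each monitored metric.
THRESHOLDS = {
    "rows_loaded_per_hour": 10_000.0,
    "pipeline_success_rate": 0.99,
}


def find_breaches(metrics, thresholds):
    """Return an alert message for every metric that falls below its minimum."""
    alerts = []
    for metric in metrics:
        minimum = thresholds.get(metric.name)
        # Metrics without a configured threshold are skipped.
        if minimum is not None and metric.value < minimum:
            alerts.append(
                f"ALERT: {metric.name}={metric.value} is below threshold {minimum}"
            )
    return alerts


if __name__ == "__main__":
    latest = [
        Metric("rows_loaded_per_hour", 8_500.0),
        Metric("pipeline_success_rate", 0.995),
    ]
    # In a real pipeline these messages would go to email, chat, paging, etc.
    for message in find_breaches(latest, THRESHOLDS):
        print(message)
```

Run as a script, this prints a single line, `ALERT: rows_loaded_per_hour=8500.0 is below threshold 10000.0`, because only the load-rate metric is under its minimum. Keeping the comparison as a pure function makes it easy to test separately from the Snowflake query and the alerting channel.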

Apache NiFi Key Terms

To accomplish the integration, you must first understand several key terms.

Table 2 summarizes these terms.

| Term | Explanation |
|---|---|
| Flow | A pipeline created by connecting processors to move data, and transform it if necessary, from a source to a destination. |
| FlowFile | Represents each object moving through the system: a set of attributes (metadata) plus the data content. |
| Process Group | A group of processors and connections that helps users organize flows hierarchically. |
| Connection | Links two processors and queues FlowFiles between them. |
| Event | Represents a change to a FlowFile as it moves through a NiFi flow. Events are tracked in data provenance. |
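To make these terms more concrete, the toy model below mirrors them in plain Python. It is not NiFi’s API (NiFi itself is written in Java); the classes and functions are hypothetical illustrations, and only the event type name `CONTENT_MODIFIED` is borrowed from NiFi’s provenance event types.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    # A FlowFile pairs a map of attributes (metadata) with the content bytes.
    attributes: dict
    content: bytes


@dataclass
class Event:
    # Toy provenance record describing what happened to a FlowFile.
    event_type: str
    filename: str


@dataclass
class Connection:
    # A connection links two processors and queues FlowFiles between them.
    queue: deque = field(default_factory=deque)


def uppercase_processor(ff: FlowFile, provenance: list) -> FlowFile:
    """Toy processor: transforms the content and records a provenance event."""
    out = FlowFile(dict(ff.attributes), ff.content.upper())
    provenance.append(Event("CONTENT_MODIFIED", out.attributes.get("filename", "")))
    return out


def run_flow(flowfiles, provenance):
    """Toy flow: source -> connection (queue) -> processor -> results."""
    conn = Connection()
    conn.queue.extend(flowfiles)
    results = []
    while conn.queue:
        results.append(uppercase_processor(conn.queue.popleft(), provenance))
    return results
```

The point of the model is the separation of concerns: attributes travel with the content, connections only buffer, processors only transform, and every change leaves a provenance event behind.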

Limitations of Apache NiFi

For all its excellent features and benefits, NiFi does suffer from a few significant limitations. The first and most important in the context of big data is that NiFi is not a feasible solution for batch processing vast amounts of data. In our experience, the breakpoint is around 200 million records, depending on your database structure.

Another limitation is that NiFi handles complex SQL query processing poorly. Simple queries return results smoothly, but processing slows down as you use sub-selects, add tables to a JOIN, or build complex WHERE clauses.

NiFi provides at-least-once delivery semantics, which means that if an operation such as a query fails, NiFi will retry it. During the retry process, an operation that actually succeeded the first time may run again, resulting in data duplication.
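The duplication risk follows directly from at-least-once semantics: a retry can re-send data that was already written. A common mitigation, sketched below under simplifying assumptions, is to make the load idempotent by keying each record so that a re-delivered copy is ignored. The function names are hypothetical and the "table" is an in-memory dict; in a real pipeline the same idea might be applied with NiFi’s DetectDuplicate processor or with a Snowflake `MERGE` on a business key.

```python
import hashlib


def record_key(record: str) -> str:
    """Stable key derived from the record content (a SHA-256 hash)."""
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def deliver_at_least_once(batch, attempts):
    """Simulate at-least-once delivery: every retry re-sends the whole batch,
    even if an earlier attempt was actually written before the failure."""
    delivered = []
    for _ in range(attempts):
        delivered.extend(batch)
    return delivered


def idempotent_load(delivered, target):
    """Write each record under its key; a re-delivered copy maps to the same
    key and does not create a second row."""
    for record in delivered:
        target.setdefault(record_key(record), record)
    return target


if __name__ == "__main__":
    batch = ["id=1,amount=10", "id=2,amount=20"]
    # A lost acknowledgment triggers one retry, so each record arrives twice.
    delivered = deliver_at_least_once(batch, attempts=2)
    table = idempotent_load(delivered, {})
    print(len(delivered), len(table))  # prints: 4 2
```

Keying on a content hash treats two genuinely identical records as one; if that is not acceptable for your data, key on a unique business identifier instead.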

Putting It All Together

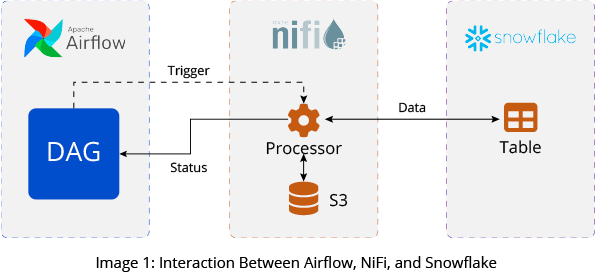

Now we can step back for a moment to see how the three systems interact. First, an Airflow directed acyclic graph (DAG) triggers a NiFi processor at regularly scheduled intervals. The NiFi processor then connects to Snowflake and fetches data from a designated table. NiFi then passes the data to another processor (for example, PutS3Object) that stores it in an AWS S3 bucket.

Finally, NiFi sends a status back to the Airflow DAG. Image 1 shows the big picture.
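The trigger step in this flow can be driven through NiFi’s REST API. The sketch below uses only the Python standard library to build the request that starts a processor, plus a small polling helper. It assumes the `PUT /nifi-api/processors/{id}/run-status` endpoint available in recent NiFi 1.x releases; the base URL, processor ID, and helper names are hypothetical, and authentication is omitted.

```python
import json
import time
import urllib.request


def _api(base_url, path):
    # base_url is a placeholder such as "https://nifi.example.com:8443".
    return f"{base_url.rstrip('/')}/nifi-api{path}"


def get_processor(base_url, processor_id):
    """GET the processor entity; its JSON includes revision.version and
    component.state. Authentication is omitted in this sketch."""
    with urllib.request.urlopen(_api(base_url, f"/processors/{processor_id}")) as resp:
        return json.loads(resp.read().decode("utf-8"))


def build_run_status_request(base_url, processor_id, revision_version, state="RUNNING"):
    """Build (but do not send) a PUT request that changes a processor's run status.
    NiFi uses the revision for optimistic locking, so pass the current value."""
    body = {"revision": {"version": revision_version}, "state": state}
    return urllib.request.Request(
        _api(base_url, f"/processors/{processor_id}/run-status"),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def wait_for(check, timeout_s=60.0, interval_s=5.0):
    """Poll check() until it returns True; return False if timeout_s elapses first."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval_s)
    return False
```

Within an Airflow DAG, a PythonOperator task could read the current revision via `get_processor(...)["revision"]["version"]`, send the request with `urllib.request.urlopen`, and then call `wait_for` with a check of your choosing (for example, whether the expected object has landed in S3). Polling is only one assumed way for Airflow to learn the outcome, and a secured NiFi instance will also require a bearer token or client certificate; check the REST API documentation for your NiFi version before relying on these endpoints.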

Final Thoughts

Apache NiFi offers enterprises a real-time, open-source data ingestion platform that can easily be used across disparate open-source environments. With an easy-to-use web-based interface, built-in data flow monitoring, and a wealth of configuration options, NiFi is an attractive option for teams working with real-time data. Its UI offers drag-and-drop processors for data ingestion, so no coding is required.

NiFi’s real power emerges when it is combined with Airflow and Snowflake. Airflow is a key component in automating data movement from source to target, allowing you to schedule tasks and monitor them.

NiFi’s drag-and-drop UI lets you quickly define “heavy lifting” tasks that move large volumes of transformed, accurate data into Snowflake. Snowflake can then provide deep insights into that data, leading to timely and accurate business decisions.
