According to a survey by Gartner analysts Brandon Medford and Craig Lowery, approximately 70% of cloud spending is wasted. There are many ways to reduce the overall cost of cloud services, and this blog discusses some of the cloud cost optimization strategies that any organization can implement.

What is Cloud Cost Optimization? Why Does it Matter?

Cloud cost optimization is the process of reducing total spending on cloud services by cleaning up unused resources and allocating right-sized capacity to computing services.

As cloud computing models evolve, technology leaders must have a clear picture of the products, services, and payment models required to achieve the best results. The public cloud is compelling, highly available, and easily scalable. At the same time, it can be costly if not managed properly.

The biggest challenge is tracking and controlling public cloud spending. In general, organizations offering cloud services charge based on usage. However, popular public cloud service providers, like AWS and Azure, charge customers for the resources ordered rather than the resources consumed.

Best Strategies for Cloud Cost Optimization

There are many ways to identify and optimize cloud costs. Some of them are listed below.

Identify the Unused or Unattached Resources

Identifying and cleaning up unused or unattached resources is the easiest way to optimize cloud costs. These are resources or storage attached to an instance that is no longer in use. Administrators or developers sometimes create an instance to execute a function and forget to turn it off after the job completes. This increases cloud costs due to excess use of storage.

Rightsizing Virtual Machines to Reduce Cost

Rightsizing allocates the right amount of resources to every virtual machine (VM) in the infrastructure, based on its workload, to achieve the minimum cost. Rightsizing workloads reduces the cost of hardware and software.

The rightsizing report, or VM comparative economics report, helps analyze the performance of VMs and suggests changes in resource allocation. Along with the rightsizing report, the Cloud Management Platform (CMP) gathers performance metrics of a VM by evaluating its average resource consumption. If the average value is below a threshold, the CMP suggests a more cost-effective VM instance.
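The threshold check described above can be sketched in a few lines of Python. This is a minimal illustration, not the logic of any particular CMP: the size ladder, the 20% CPU threshold, and the function name `suggest_size` are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical size ladder, ordered from smallest to largest.
SIZES = ["small", "medium", "large", "xlarge"]

def suggest_size(current_size, cpu_samples, threshold=20.0):
    """Suggest the next smaller size when average CPU use (percent) is below the threshold."""
    average = mean(cpu_samples)
    index = SIZES.index(current_size)
    if average < threshold and index > 0:
        return SIZES[index - 1]  # underused: step down one size
    return current_size          # busy enough, or already the smallest size

# Example: a "large" VM whose CPU samples average under 20% is a downsizing candidate.
suggestion = suggest_size("large", [12.0, 18.5, 9.3, 15.1])
```

A real rightsizing report would normally weigh memory, disk, and network metrics as well, and look at peaks rather than only the average.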

Use Power Scheduling to Pre-plan Instance Shutdown and Restart

Cloud Management Platform (CMP) power schedulers help configure an instance to shut down and restart on a schedule. The scheduler stops instances when they are not in use, so the user is billed only for the time the instance has been used. In most scenarios, instances are not required to run 24/7. You can configure a Power Scheduler Group, as shown below, to run an instance from Monday to Friday during work hours.
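Independently of any particular CMP, the decision a power scheduler makes can be sketched as a small Python function. The 09:00 to 18:00 window and the name `should_be_running` are assumptions for illustration, not settings taken from a real Power Scheduler Group.

```python
from datetime import datetime, time

WORK_DAYS = {0, 1, 2, 3, 4}        # Monday=0 .. Friday=4
WORK_START = time(9, 0)            # assumed start of work hours
WORK_END = time(18, 0)             # assumed end of work hours

def should_be_running(now: datetime) -> bool:
    """True when the instance should be powered on under the weekday schedule."""
    return now.weekday() in WORK_DAYS and WORK_START <= now.time() < WORK_END

# A scheduler job would call this periodically and start or stop the instance
# whenever its actual state differs from the result.
```

With these assumed hours the instance runs 45 of the 168 hours in a week, so the billed time drops to roughly 27% of an always-on instance.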

Analyze Heatmaps to Determine Peak Usage Hours

Heatmaps are an essential tool for cloud cost optimization. A heatmap is a visual representation that shows peaks in computing demand. This data is critical for estimating when to start and stop an instance to reduce cost. Based on the heatmap results, one can start or stop instances manually or automate the process.
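The data behind such a heatmap is simply utilization averaged per weekday and hour. The sketch below assumes the samples arrive as `(timestamp, percent)` pairs; the function names and the 50% threshold are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

def build_heatmap(samples):
    """Average utilization per (weekday, hour) cell from (timestamp, percent) samples."""
    totals = defaultdict(lambda: [0.0, 0])
    for timestamp, percent in samples:
        cell = totals[(timestamp.weekday(), timestamp.hour)]
        cell[0] += percent
        cell[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}

def busy_hours(heatmap, threshold=50.0):
    """Sorted (weekday, hour) cells whose average utilization meets the threshold."""
    return sorted(key for key, average in heatmap.items() if average >= threshold)
```

The cells returned by `busy_hours` indicate when instances need to be up; the remaining hours are candidates for a stop schedule.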

Virtual Machine Lifecycle Management for Automatic Decommissioning

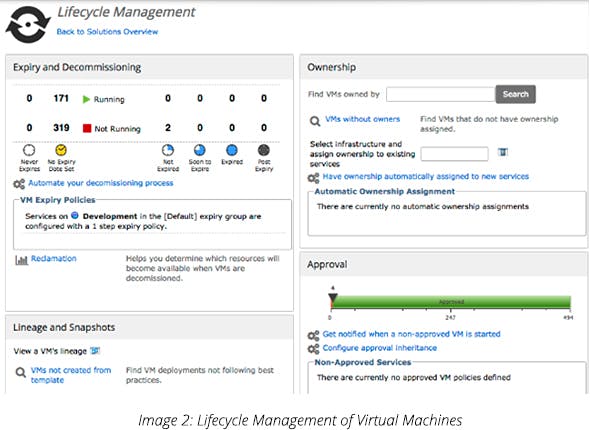

Lifecycle management is essential for public cloud cost optimization. Unmanaged VMs waste resources, and the best way to regulate them is to add an expiration or decommissioning date to every instance. This changes the organization’s cloud utilization from an ownership model to a rental model.

Instances are available only as long as they are required. An automation script can monitor the expiration dates and trigger an e-mail to the VM owner when the expiration date is near. If the instance is still required, the user can extend the expiration date; otherwise, it is decommissioned automatically.
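The decision such an automation script makes for each instance can be sketched as follows. The seven-day warning window and the action names are assumptions; sending the e-mail and decommissioning the VM are left to whatever tooling the organization uses.

```python
from datetime import date, timedelta

def lifecycle_action(expires_on: date, today: date, warn_days: int = 7) -> str:
    """Decide what to do with an instance based on its expiration date."""
    if today > expires_on:
        return "decommission"      # expiration date has passed
    if expires_on - today <= timedelta(days=warn_days):
        return "notify_owner"      # expiration is near: e-mail the VM owner
    return "keep"
```

If the owner extends the expiration date after a notification, the next run returns "keep" again.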

Purchase Reserved Instances (RIs) at Huge Discounts

Organizations committed to using cloud services for the long term should invest in RIs. The discount depends on the upfront payment and the commitment period, and RIs can save up to 75% of the total cost, which makes them a must for cloud cost optimization. RIs are available for one-year or three-year terms in AWS and Azure. Because of this commitment, it is very important to analyze historical usage and accurately estimate future requirements.
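Why the usage estimate matters can be shown with simple arithmetic: a reservation is paid for the whole term whether or not the instance runs. The sketch below uses made-up hourly rates, not real AWS or Azure prices, and the function names are hypothetical.

```python
def reserved_savings(on_demand_hourly, reserved_hourly, expected_hours, term_hours):
    """Savings (positive) or loss (negative) from reserving instead of paying on demand."""
    on_demand_cost = on_demand_hourly * expected_hours  # pay only for hours used
    reserved_cost = reserved_hourly * term_hours        # pay for the full term
    return on_demand_cost - reserved_cost

def break_even_utilization(on_demand_hourly, reserved_hourly):
    """Fraction of the term the instance must run for the reservation to pay off."""
    return reserved_hourly / on_demand_hourly

# Illustrative rates only: 0.10 per hour on demand, 0.04 per hour reserved.
one_year = 8760
always_on = reserved_savings(0.10, 0.04, expected_hours=one_year, term_hours=one_year)
rarely_on = reserved_savings(0.10, 0.04, expected_hours=2000, term_hours=one_year)
```

With these example rates the reservation only pays off if the instance runs for more than about 40% of the term, which is why `rarely_on` comes out negative.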

Keep Optimizing the Cloud Cost by Analyzing Regularly

Cloud cost optimization is an ongoing process. By applying all the above strategies and regularly monitoring instances, organizations can effectively manage the cost of their cloud-based infrastructure.

A Sample Cloud Cost Optimization Model

It is generally assumed that baselining VMs is the only way to reduce cloud cost, but many other aspects need consideration. Let’s understand this in detail.

An immutable deployment process can be followed to achieve zero-downtime deployment. To keep business applications running 24/7, we can use a rolling upgrade, in which systems are upgraded sequentially: separating an instance from the cluster, upgrading the application, and adding the instance back to the cluster.
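The sequential steps can be sketched with a toy model in which the cluster is a dictionary of instance names and application versions. This only illustrates the order of operations; a real rollout would also run health checks before putting an instance back in service.

```python
def rolling_upgrade(cluster, new_version):
    """Upgrade a {name: version} cluster one instance at a time.

    Returns the smallest number of instances that stayed in service.
    """
    min_in_service = len(cluster)
    for name in list(cluster):
        cluster.pop(name)                                  # separate from the cluster
        min_in_service = min(min_in_service, len(cluster))
        cluster[name] = new_version                        # upgrade, then add back
    return min_in_service
```

Because only one instance is out of the cluster at any moment, the remaining instances keep serving traffic throughout the upgrade.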

The process may face deployment failures that destroy the whole instance and its back-end configurations (front-end and back-end rules, disk, health checks, load balancer, etc.). During re-deployment, a new instance can be created with a dynamic load balancer.

Deleting External Load Balancers and Unattached Disks

When an instance is destroyed, it is terminated, but the external load balancers and disks that were assigned to it continue to exist and incur cost. Over time, the count of these external load balancers and unattached disks grows, along with the monthly bill. To overcome this, we can implement an automation process that identifies external load balancers and disks that are not attached to any running instance and deletes them periodically, thereby reducing the overall cost.
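The identification step of that automation can be sketched as below. The inventory format (dictionaries with `attached_to` and `backends` lists) is an assumption for the example; in practice it would be built from the cloud provider's inventory listing.

```python
def find_orphans(disks, load_balancers, running_instances):
    """Names of disks and load balancers not tied to any running instance."""
    running = set(running_instances)
    orphan_disks = [
        disk["name"] for disk in disks
        if running.isdisjoint(disk["attached_to"])
    ]
    orphan_lbs = [
        lb["name"] for lb in load_balancers
        if running.isdisjoint(lb["backends"])
    ]
    return orphan_disks, orphan_lbs
```

Writing the result to a report for review before the periodic delete job runs is a sensible safeguard against removing something that is about to be reattached.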

Deleting Unused Storage Buckets

The first step in identifying unused storage buckets is to ask “What costs me money?” when using cloud storage. When analyzing a customer’s cloud storage use, it is crucial to consider:

- Performance: If the customers are located in a single region, it is preferable to host storage in the region closest to them. For global customers, multi-regional storage is preferable. This reduces latency and improves performance.

- Retention: The retention period for a data type can be estimated by asking, “Is this object valuable?” and “For how long will this object be valuable?” These two questions will determine the appropriate lifecycle policy.

- Access Pattern: “How long do you need the data?” and “How frequently do you retrieve the data?” are the two questions that will determine the specific storage policy required for the customer.

To save cost, we can activate and passivate environments, shutting them down whenever they are not in use. Storage buckets assigned to passivated environments are left unused. We can implement automation that generates reports on the last access details of each bucket and deletes buckets based on the requirement.

Command for listing all the buckets: gsutil ls

Command for retrieving the total size of a bucket: gsutil du -s gs://[BUCKET_NAME]/

Command for deleting a bucket: gsutil rm -r gs://[BUCKET_NAME]
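The report-then-delete flow can be sketched in Python. The last-access dates are assumed to come from your own reporting (for example, usage logs), and the 90-day idle limit is an arbitrary choice. The sketch only prepares the delete commands shown above so they can be reviewed before anyone runs them.

```python
from datetime import date

def stale_buckets(last_access, today, max_idle_days=90):
    """Bucket names whose last recorded access is older than max_idle_days."""
    return sorted(
        name for name, seen in last_access.items()
        if (today - seen).days > max_idle_days
    )

def delete_commands(buckets):
    """Build (but do not run) the gsutil delete command for each bucket."""
    return [f"gsutil rm -r gs://{name}" for name in buckets]
```

Keeping command generation separate from execution leaves room for an approval step, which matters because deleting a bucket also removes its contents.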

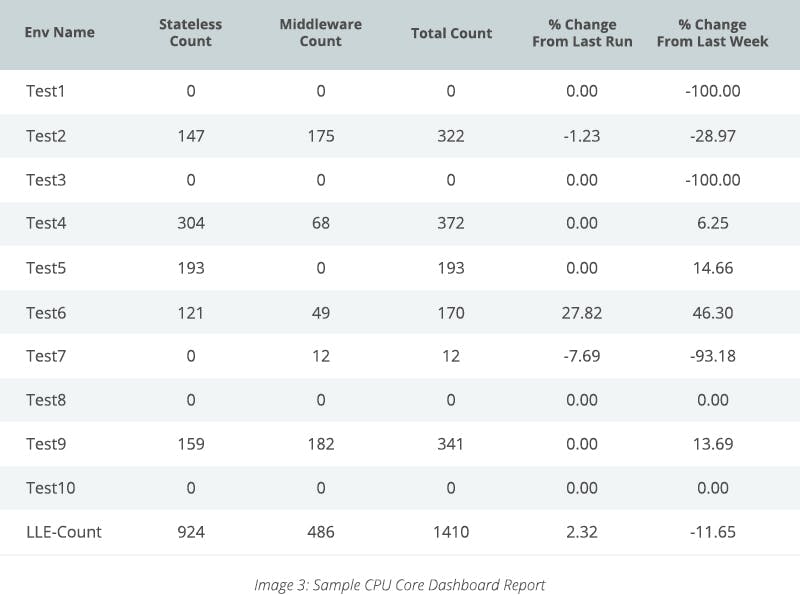

Creating a Cores Dashboard

A CPU core dashboard is a monitoring system that can be automated to track the number of CPU cores used in each environment. We can set minimum and maximum thresholds for the core count, and the monitoring system notifies the project’s teams when the core count reaches a threshold. Every 8 hours, the dashboard generates a report on the core count of all the environments, along with a comparison against the previous report.

We can compare the core count with the previous week’s data. If the count has increased, we can inform the respective teams so they can take appropriate action. This enables the project to maintain the required number of cores and optimize the cost.

Observations:

- 6.25% increase in cores

- 14.66% increase in cores

- 46.30% increase in cores

- 13.69% increase in cores

- 30.49% increase in cores
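The comparison behind such a report can be sketched as follows. The environment names and core counts in the example are invented for illustration and are not the data behind the observations above; `core_report` is a hypothetical helper.

```python
def core_report(previous, current, min_cores, max_cores):
    """Compare per-environment core counts with the previous report."""
    report = {}
    for env, cores in current.items():
        before = previous.get(env)
        # Percentage change is undefined for new environments or a zero baseline.
        change = None if not before else (cores - before) / before * 100
        report[env] = {
            "cores": cores,
            "change_pct": change,
            "alert": cores < min_cores or cores > max_cores,
        }
    return report

# Hypothetical counts from two consecutive reports.
report = core_report({"dev": 40, "qa": 32}, {"dev": 50, "qa": 32},
                     min_cores=8, max_cores=48)
```

In this example the "dev" environment grew by 25% and crossed the assumed maximum of 48 cores, so it would be flagged for the responsible team.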

Using Preemptible VMs/Spot Instances

Preemptible VMs are low-cost and short-lived: a preemptible VM runs for at most 24 hours. These VMs cost up to 80% less than regular instances, and the price of a preemptible VM is fixed.

Using preemptible VMs in non-production environments can lead to substantial cost savings. If multiple VMs for the same component are part of an auto-scaling group, the other servers can take the load when a preemptible VM goes down after 24 hours. The auto-scaling group detects the mismatch in the number of servers and creates another preemptible VM in place of the one that was shut down. That way, we can use preemptible VMs to save cloud cost.
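The replacement behavior of the auto-scaling group can be modeled with a small reconcile function. This is a toy simulation with hypothetical VM names, not the API of any cloud provider's autoscaler.

```python
import itertools

def reconcile(group, preempted, desired_count, name_source):
    """Drop preempted VMs, then create replacements until the group is back at desired_count."""
    survivors = [vm for vm in group if vm not in preempted]
    while len(survivors) < desired_count:
        survivors.append(f"preemptible-{next(name_source)}")
    return survivors

# Example: one of three VMs is shut down and a new one takes its place.
names = itertools.count(start=4)
group = ["preemptible-1", "preemptible-2", "preemptible-3"]
group = reconcile(group, {"preemptible-2"}, desired_count=3, name_source=names)
```

While the replacement starts, the surviving VMs carry the load, which is why this pattern suits components that run more than one server.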

Preemptible VMs should not be used in the production environment, since it contains mission-critical applications. Where minimal disruption is acceptable, however, preemptible VMs can be used; non-production environments are the ideal case.

Another use case for preemptible VMs is batch job execution. Batch job servers need not run 24/7, only when there is a batch job to execute, which makes preemptible VMs a good fit.

Disk Baselining

The cloud instances that we create come with system-managed, pre-defined baselines, which determine the level of compliance. We can implement a custom baseline called Disk Baselining. An automated monitoring script runs periodically and generates a detailed report of the disk usage of an instance. By comparing the day-to-day disk baselining reports, we can resize the disk space. This monitoring process provides statistical data on how much disk space should be allocated to each instance, thereby eliminating unused disk space and reducing cost.
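One way to turn the daily reports into a size recommendation is to take the peak usage, add headroom, and round up to a provisioning step. The 20% headroom, the 10 GB step, and the function name are assumptions for this sketch.

```python
import math

def recommend_disk_gb(daily_used_gb, headroom=0.2, step_gb=10):
    """Recommend a disk size: peak daily usage plus headroom, rounded up to step_gb."""
    peak = max(daily_used_gb)
    target = peak * (1 + headroom)
    return math.ceil(target / step_gb) * step_gb

# Example: usage peaked at 47 GB over four daily reports.
recommended = recommend_disk_gb([42, 45, 47, 44])
```

Comparing `recommended` with the currently allocated size shows how much disk space is going unused on that instance.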

Conclusion

Every growing organization is embracing the cloud, and it is imperative to reach the market faster in response to changing market dynamics. If not appropriately managed, cloud services can cost a lot, as they follow a usage-based model. Running unnecessary instances, poorly allotted disk space, and unwanted disks attached to instances that are no longer in use all increase your cloud cost. However, as long as cloud cost optimization strategies are followed, many unwanted infrastructure expenses can be easily avoided.