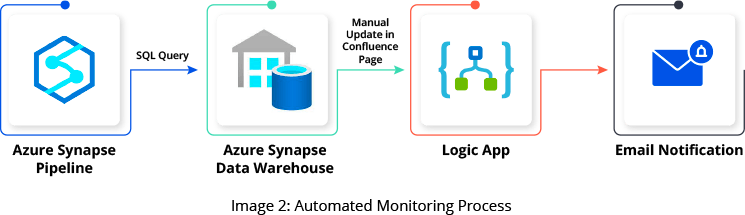

The company’s existing setup included Azure Synapse Analytics components, data pipelines, linked services, and API connections. Instead of having the company buy additional tools, our Managed Services team reused these existing components to build an automated solution that effectively monitors the pipelines and objects.

The team integrated SQL monitoring scripts into Synapse Analytics pipeline activities so that the scripts run against the internal Synapse data warehouse. The query results are then passed to Azure Logic Apps, which sends an HTML email notification to the designated distribution lists every two hours. This automated monitoring pipeline drastically reduced the effort the GSPANN team spent on manual monitoring.
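The source does not show the monitoring scripts or the Logic Apps workflow. As a minimal sketch of the reporting step, assuming the SQL monitoring query returns one row per pipeline run with hypothetical `pipeline_name`, `status`, and `run_start` columns, the results could be rendered into an HTML table before being handed to the email step:

```python
from html import escape


def build_html_report(rows):
    """Render monitoring query results as an HTML table for an email body.

    `rows` is assumed to be a list of dicts with the hypothetical keys
    'pipeline_name', 'status', and 'run_start' (illustrative only).
    """
    header = "<tr><th>Pipeline</th><th>Status</th><th>Run start</th></tr>"
    body_rows = []
    for row in rows:
        # Highlight any run that did not succeed so it stands out in the email.
        style = "" if row["status"] == "Succeeded" else ' style="color:red"'
        body_rows.append(
            f"<tr{style}><td>{escape(row['pipeline_name'])}</td>"
            f"<td>{escape(row['status'])}</td>"
            f"<td>{escape(row['run_start'])}</td></tr>"
        )
    return f"<table>{header}{''.join(body_rows)}</table>"
```

Escaping each value keeps pipeline names that contain characters such as `<` or `&` from breaking the email markup.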

Our engineers designed the automated monitoring so that even child pipeline failures are captured in the scheduled emails. The emails also show the team whether any pipeline triggers have stopped.
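The article does not say how child failures and stopped triggers are detected. One possible approach, sketched here under two assumptions, is to scan both lists on each scheduled check: that each run record carries a hypothetical `parent_run_id` linking a child run to the run that invoked it, and that each trigger record has a hypothetical `runtime_state` field equal to `"Started"` while the trigger is active.

```python
def find_child_failures(runs):
    """Return (parent_pipeline, child_pipeline) pairs for failed child runs.

    Each run is assumed to be a dict with the hypothetical keys 'run_id',
    'pipeline_name', 'status', and 'parent_run_id' (None for top-level runs).
    """
    by_id = {r["run_id"]: r for r in runs}
    failures = []
    for r in runs:
        parent_id = r.get("parent_run_id")
        # Only child runs (those with a parent) that failed are reported here.
        if r["status"] == "Failed" and parent_id is not None:
            parent = by_id.get(parent_id)
            parent_name = parent["pipeline_name"] if parent else "(unknown parent)"
            failures.append((parent_name, r["pipeline_name"]))
    return failures


def find_stopped_triggers(triggers):
    """Return names of triggers whose hypothetical 'runtime_state' is not 'Started'."""
    return [t["name"] for t in triggers if t["runtime_state"] != "Started"]
```

Pairing each failed child with its parent's name tells the email's reader which top-level pipeline to investigate.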

Key aspects of our solution included:

- Identifying child pipeline failures: Child pipeline failures, which previously went unnoticed, are now reported in the scheduled emails.

- Early detection of issues: Pipelines and objects are now checked every two hours, so any problem the system detects can be handled within that two-hour window.

- Timely Power BI report refreshes: The new system ensures that critical Power BI reports finish refreshing on time despite massive data loads.

- Reusing the existing infrastructure: Our team used additional capabilities of the existing Synapse Analytics platform, saving the company the cost of procuring new infrastructure.
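The two-hour check described in the list above could also include a freshness test on the monitored objects. A minimal sketch follows. It assumes the monitoring query returns a hypothetical mapping from each object name to its last successful load time, and it flags any object that has not been loaded within the window:

```python
from datetime import datetime, timedelta

# Matches the two-hour monitoring schedule described in the article.
CHECK_WINDOW = timedelta(hours=2)


def find_stale_objects(last_loaded, now):
    """Return object names whose last successful load is older than CHECK_WINDOW.

    `last_loaded` is assumed to map object names (hypothetical) to datetimes;
    `now` is the time of the current check.
    """
    return sorted(
        name for name, loaded_at in last_loaded.items() if now - loaded_at > CHECK_WINDOW
    )
```

Passing `now` in explicitly, rather than calling `datetime.now()` inside the function, keeps the check deterministic and easy to test.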