Data lakes are becoming increasingly popular tools for data-driven organizations. They offer an easy way to store, manage, and process massive volumes of data, giving businesses access to powerful insights. But with so much data to manage, how can you be sure that the data you collect is of high quality?

That’s where Delta Lake comes in. Delta Lake is an open-source storage layer that brings ACID (atomicity, consistency, isolation, durability) transactions to Apache Spark and big data workloads, so that businesses can base their decisions on accurate, reliable data. Companies spend millions of dollars getting data into data lakes. Data engineers then use Apache Spark on that data for machine learning, recommendation engines, fraud detection, IoT, and predictive maintenance, among other uses. But the fact is that many of these projects never get reliable data to work with.

Switching from a traditional data lake to Delta Lake gives you the tools to produce a scalable analytics implementation. The steady stream of high-quality data allows business units to ‘see further’, and to make accurate business decisions, improving company profitability.

Traditional Data Lake Challenges

Traditional data lake platforms often face these three challenges:

- Failed production jobs: A production job that fails partway through leaves data in a corrupted state, and data engineers then spend tedious hours defining jobs to recover it. Often they end up writing scripts to clean up and revert the partial writes.

- Lack of schema enforcement: Most data lake platforms lack a schema enforcement mechanism. Without one, writes with mismatched columns or types are accepted, which often leads to inconsistent, low-quality data.

- Lack of consistency: When you read data while a concurrent write is in progress, the result is inconsistent until the Parquet files are fully written. When a streaming job performs multiple writes, downstream apps reading the data get unreliable results because there is no isolation between writes.
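The first and third challenges come down to readers trusting whatever files happen to be in a directory. The sketch below is a toy, pure-Python model of the alternative, where data files only become visible once a single log entry names them. It loosely mirrors the idea behind Delta Lake's transaction log but is not Delta Lake's implementation: the `_log` directory, the JSON part files, and every function name here are assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path


def write_part(table: Path, name: str, rows: list) -> None:
    """Write one data file. Stands in for a Parquet part file."""
    (table / name).write_text(json.dumps(rows))


def commit(table: Path, version: int, files: list) -> None:
    """Publish a set of data files by writing a single log entry."""
    log_dir = table / "_log"  # hypothetical log location for this toy model
    log_dir.mkdir(exist_ok=True)
    (log_dir / f"{version:020d}.json").write_text(json.dumps({"add": files}))


def read_by_listing(table: Path) -> list:
    """Plain data lake read: trust every data file found in the directory."""
    rows = []
    for part in sorted(table.glob("part-*.json")):
        rows.extend(json.loads(part.read_text()))
    return rows


def read_by_log(table: Path) -> list:
    """Log-based read: only files named in committed log entries are visible."""
    rows = []
    for entry in sorted((table / "_log").glob("*.json")):
        for name in json.loads(entry.read_text())["add"]:
            rows.extend(json.loads((table / name).read_text()))
    return rows


def demo() -> tuple:
    with tempfile.TemporaryDirectory() as tmp:
        table = Path(tmp)
        # Job 1 succeeds: it writes its data file, then commits it.
        write_part(table, "part-0000.json", [1, 2])
        commit(table, 0, ["part-0000.json"])
        # Job 2 writes one file and then "crashes" before committing.
        write_part(table, "part-0001.json", [3])
        return read_by_listing(table), read_by_log(table)


listing_rows, log_rows = demo()
print(listing_rows)  # includes the row left behind by the failed job
print(log_rows)      # only rows from the committed job
```

In this model the failed job needs no cleanup script for readers to stay correct, because its orphaned file is never referenced by a log entry. A real system also has to make the log write itself atomic, which this sketch does not attempt.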

Why Use Delta Lake?

The traditional approach to data lakes often involves storing large amounts of raw data in Hadoop Distributed File System (HDFS), with limited options to run transactions and other forms of analytics.

This limits the scalability of your analytics implementation and can be costly. Delta Lake addresses this by bringing ACID transactions to Apache Spark and big data workloads. By using Delta Lake, you can make your analytics implementation scalable, reliable, and consistent.

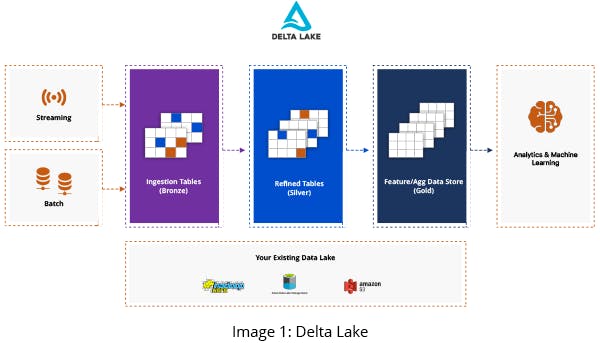

Delta Lake is also compatible with MLflow. Initially, Delta Lake was available only in Databricks on Azure and AWS. In that earlier incarnation, Delta Lake data was stored on DBFS (Databricks File System), which might sit on top of ADLS (Azure Data Lake Storage) or Amazon S3. Delta Lake can now be layered on top of HDFS, ADLS, S3, or a local file system.

Delta Lake Features

Delta Lake provides a transactional storage layer that sits on top of existing data lakes and leverages the capabilities of Apache Spark to offer strong reliability and performance. When you switch from a traditional data lake to Delta Lake, the following features can improve your analytics implementation:

- Transaction support

- Unified batch and stream processing

- Schema enforcement

- Time travel or versioning
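To make two of these features concrete, here is a toy, in-memory model of schema enforcement and versioned reads. It is only a sketch of the concepts: `ToyTable`, `SchemaMismatchError`, and their methods are hypothetical names invented for this example, and Delta Lake itself stores versions in files on disk rather than in a Python list.

```python
from dataclasses import dataclass, field


class SchemaMismatchError(ValueError):
    """Raised when appended rows do not match the table schema."""


@dataclass
class ToyTable:
    schema: dict  # column name -> expected Python type
    # Each entry is the full table contents at that version; version 0 is empty.
    versions: list = field(default_factory=lambda: [[]])

    def append(self, rows: list) -> int:
        """Validate every row, then record a new version and return its number."""
        for row in rows:
            if set(row) != set(self.schema):
                raise SchemaMismatchError(f"columns {sorted(row)} do not match schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise SchemaMismatchError(f"{column!r} is not {expected.__name__}")
        # All rows passed, so the whole batch becomes visible as one new version.
        self.versions.append(self.versions[-1] + rows)
        return len(self.versions) - 1

    def read(self, version=None) -> list:
        """Read the latest version, or an earlier one by number."""
        return self.versions[-1 if version is None else version]


table = ToyTable(schema={"id": int, "city": str})
v1 = table.append([{"id": 1, "city": "Oslo"}])
v2 = table.append([{"id": 2, "city": "Lima"}])

try:
    table.append([{"id": "3", "city": "Pune"}])  # id is a string, not an int
    rejected = False
except SchemaMismatchError:
    rejected = True

print(rejected)       # the mismatched batch was refused
print(table.read(1))  # the table as it looked after the first append
print(table.read())   # the latest version
```

The rejected batch leaves no trace in the version history, and earlier versions stay readable after later appends. In Delta Lake itself, an earlier version is read through Spark's reader, for example with the `versionAsOf` option.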

These features make Delta Lake well suited to improving the scalability, reliability, and consistency of your analytics implementation, and they help keep your analytics results consistent and accurate. Let's first look at transaction support.